A simpler path to better computer vision | MIT News

Before a machine-learning model can complete a task, such as identifying cancer in medical images, the model must be trained. Training image classification models typically involves showing the model millions of example images gathered into a massive dataset.

However, using real image data can raise practical and ethical concerns: The images could run afoul of copyright laws, violate people’s privacy, or be biased against a certain racial or ethnic group. To avoid these pitfalls, researchers can use image generation programs to create synthetic data for model training. But these techniques are limited because expert knowledge is often needed to hand-design an image generation program that can create effective training data.

Researchers from MIT, the MIT-IBM Watson AI Lab, and elsewhere took a different approach. Instead of designing customized image generation programs for a particular training task, they gathered a dataset of 21,000 publicly available programs from the internet. Then they used this large collection of basic image generation programs to train a computer vision model.

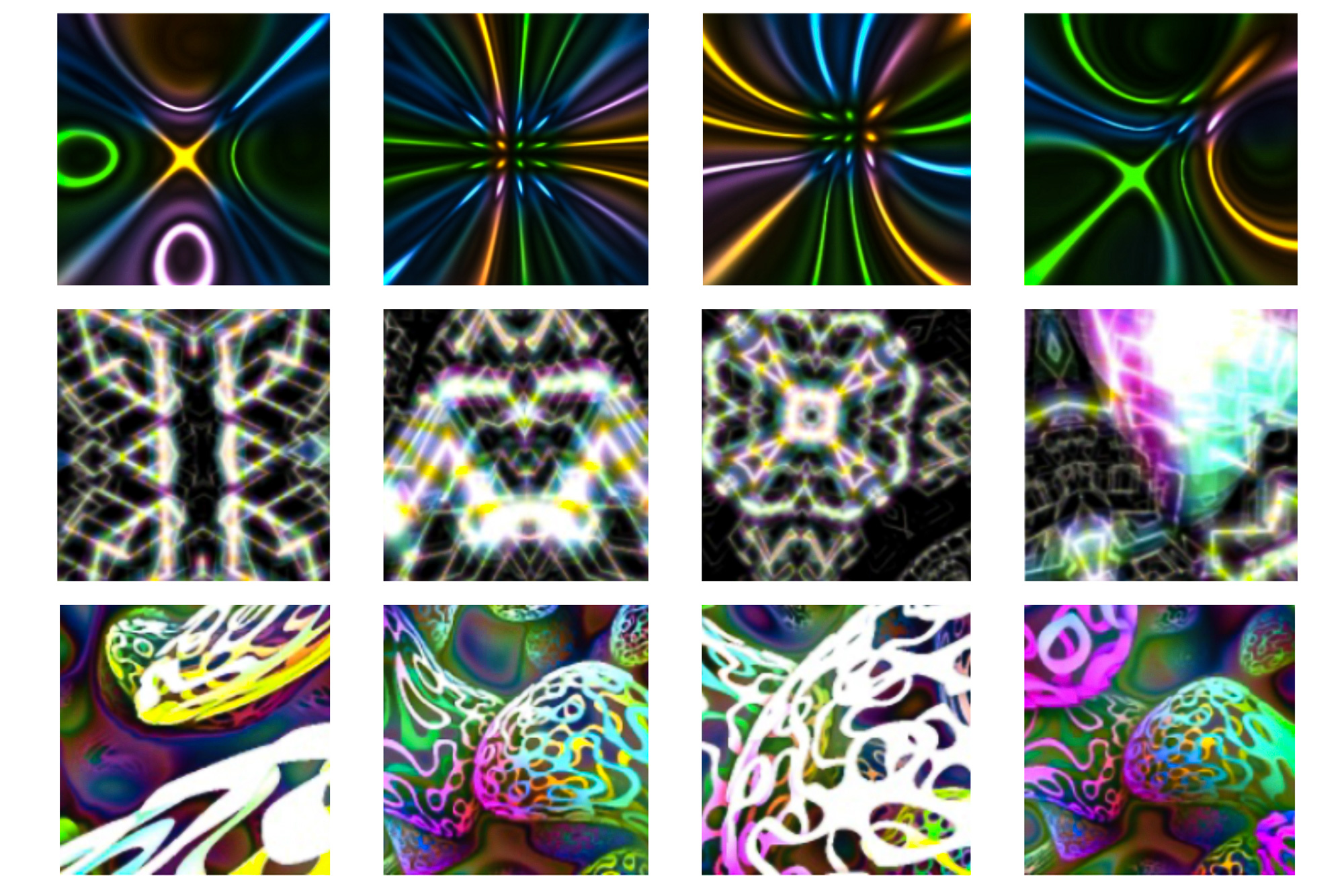

These programs produce diverse images that display simple colors and textures. The researchers did not curate or alter the programs, each of which comprised just a few lines of code.

The models they trained with this large dataset of programs classified images more accurately than other synthetically trained models. And, while their models underperformed those trained with real data, the researchers showed that increasing the number of image programs in the dataset also increased model performance, revealing a path to attaining higher accuracy.

“It turns out that using lots of programs that are uncurated is actually better than using a small set of programs that people need to manipulate. Data are important, but we have shown that you can go pretty far without real data,” says Manel Baradad, an electrical engineering and computer science (EECS) graduate student working in the Computer Science and Artificial Intelligence Laboratory (CSAIL) and lead author of the paper describing this technique.

Co-authors include Tongzhou Wang, an EECS grad student in CSAIL; Rogerio Feris, principal scientist and manager at the MIT-IBM Watson AI Lab; Antonio Torralba, the Delta Electronics Professor of Electrical Engineering and Computer Science and a member of CSAIL; and senior author Phillip Isola, an associate professor in EECS and CSAIL; along with others at JPMorgan Chase Bank and Xyla, Inc. The research will be presented at the Conference on Neural Information Processing Systems.

Rethinking pretraining

Machine-learning models are typically pretrained, which means they are trained on one dataset first to help them build parameters that can be used to tackle a different task. A model for classifying X-rays might be pretrained using a huge dataset of synthetically generated images before it is trained for its actual task using a much smaller dataset of real X-rays.

These researchers previously showed that they could use a handful of image generation programs to create synthetic data for model pretraining, but the programs needed to be carefully designed so the synthetic images matched up with certain properties of real images. This made the technique difficult to scale up.

In the new work, they used an enormous dataset of uncurated image generation programs instead.

They began by gathering a collection of 21,000 image generation programs from the internet. All the programs are written in a simple programming language and comprise just a few snippets of code, so they generate images rapidly.

“These programs have been designed by developers all over the world to produce images that have some of the properties we are interested in. They produce images that look kind of like abstract art,” Baradad explains.

These simple programs can run so quickly that the researchers didn’t need to produce images in advance to train the model. The researchers found they could generate images and train the model simultaneously, which streamlines the process.
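
To make the idea of generating images while training concrete, here is a minimal sketch in plain Python. It is not the researchers' code: the two tiny "programs" (`stripes`, `rings`), the `render` and `batch_stream` helpers, and the placeholder `train_step` are all hypothetical stand-ins, and using the index of the program as a label is an assumption made only for illustration.

```python
import math
import random

# Hypothetical stand-ins for collected image programs: each maps pixel
# coordinates (x, y in [0, 1)) and a random parameter t to an RGB value in [0, 1].
def stripes(x, y, t):
    v = 0.5 + 0.5 * math.sin(10 * x + t)
    return (v, 1 - v, 0.5)

def rings(x, y, t):
    r = math.hypot(x - 0.5, y - 0.5)
    v = 0.5 + 0.5 * math.cos(20 * r + t)
    return (0.5, v, 1 - v)

PROGRAMS = [stripes, rings]

def render(program, t, size=8):
    """Run one program over a size x size grid and return rows of RGB tuples."""
    return [[program(col / size, row / size, t) for col in range(size)]
            for row in range(size)]

def batch_stream(programs, batch_size, rng):
    """Yield (images, program_ids) batches forever, rendering each image on demand."""
    while True:
        ids = [rng.randrange(len(programs)) for _ in range(batch_size)]
        images = [render(programs[i], rng.uniform(0, 2 * math.pi)) for i in ids]
        yield images, ids

def train_step(images, ids):
    """Placeholder for a real model update; it only reports how many images it saw."""
    return len(images)

rng = random.Random(0)
stream = batch_stream(PROGRAMS, batch_size=4, rng=rng)
seen = 0
for _ in range(3):
    images, ids = next(stream)  # images are rendered here, not read from disk
    seen += train_step(images, ids)
```

Because every batch is rendered at the moment it is requested, nothing has to be stored ahead of time; this only works if rendering is cheap relative to the model update, which is the property the article attributes to the collected programs.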

They used their massive dataset of image generation programs to pretrain computer vision models for both supervised and unsupervised image classification tasks. In supervised learning, the image data are labeled, while in unsupervised learning the model learns to categorize images without labels.

Enhancing accuracy

When they compared their pretrained models to state-of-the-art computer vision models that had been pretrained using synthetic data, their models were more accurate, meaning they put images into the correct categories more often. While the accuracy levels were still lower than those of models trained on real data, their technique narrowed the performance gap between models trained on real data and those trained on synthetic data by 38 percent.
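
One way to read the 38 percent figure is as the share of the earlier real-versus-synthetic accuracy gap that the new models recover. The sketch below shows that arithmetic under this assumed reading; the function name `gap_closed` is hypothetical, and the three accuracy values are made-up placeholders chosen only so the numbers work out, not results from the paper.

```python
def gap_closed(real_acc, prior_synth_acc, new_synth_acc):
    """Fraction of the (real - prior synthetic) accuracy gap recovered by the new model."""
    old_gap = real_acc - prior_synth_acc
    new_gap = real_acc - new_synth_acc
    return (old_gap - new_gap) / old_gap

# Made-up placeholder accuracies (percent), NOT figures from the paper.
fraction = gap_closed(real_acc=80.0, prior_synth_acc=50.0, new_synth_acc=61.4)
```

With these placeholder values the old gap is 30 points and the new gap is 18.6 points, so `fraction` comes out at roughly 0.38.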

“Importantly, we show that for the number of programs you collect, performance scales logarithmically. We do not saturate performance, so if we collect more programs, the model would perform even better. So, there is a way to extend our approach,” Baradad says.
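
Logarithmic scaling has a simple practical consequence: each doubling of the number of programs adds about the same amount of accuracy, so gains keep coming but get more expensive. The sketch below illustrates this with an assumed model of the form accuracy = a + b · ln(N); the function name and the coefficients `A` and `B` are made-up for illustration and are not fitted to the paper's results.

```python
import math

def predicted_accuracy(num_programs, a, b):
    """Assumed log-scaling model: accuracy grows linearly in ln(num_programs)."""
    return a + b * math.log(num_programs)

# Made-up coefficients for illustration only, NOT values from the paper.
A, B = 20.0, 3.0

# Accuracy gained by each doubling of the program count.
gains = [
    predicted_accuracy(2 * n, A, B) - predicted_accuracy(n, A, B)
    for n in (1000, 2000, 4000)
]
```

Under this model every entry in `gains` equals `B * math.log(2)` regardless of the starting count, which is why a curve of this shape does not flatten out the way a saturating one would.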

The researchers also used each individual image generation program for pretraining, in an effort to uncover factors that contribute to model accuracy. They found that when a program generates a more diverse set of images, the model performs better. They also found that colorful images with scenes that fill the entire canvas tend to improve model performance the most.

Now that they have demonstrated the success of this pretraining approach, the researchers want to extend their technique to other types of data, such as multimodal data that include text and images. They also want to continue exploring ways to improve image classification performance.

“There is still a gap to close with models trained on real data. This gives our research a direction that we hope others will follow,” he says.