‘Mind-reading’ AI: Japan study sparks ethical debate

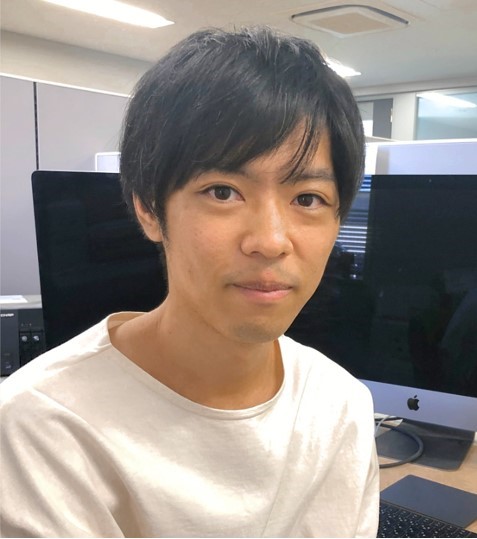

Tokyo, Japan – Yu Takagi could not believe his eyes. Sitting alone at his desk on a Saturday afternoon in September, he watched in awe as artificial intelligence decoded a subject’s brain activity to create images of what he was seeing on a screen.

“I still remember when I saw the first [AI-generated] images,” Takagi, a 34-year-old neuroscientist and assistant professor at Osaka University, told Al Jazeera.

“I went into the bathroom and looked at myself in the mirror and saw my face, and thought, ‘Okay, that’s normal. Maybe I’m not going crazy’”.

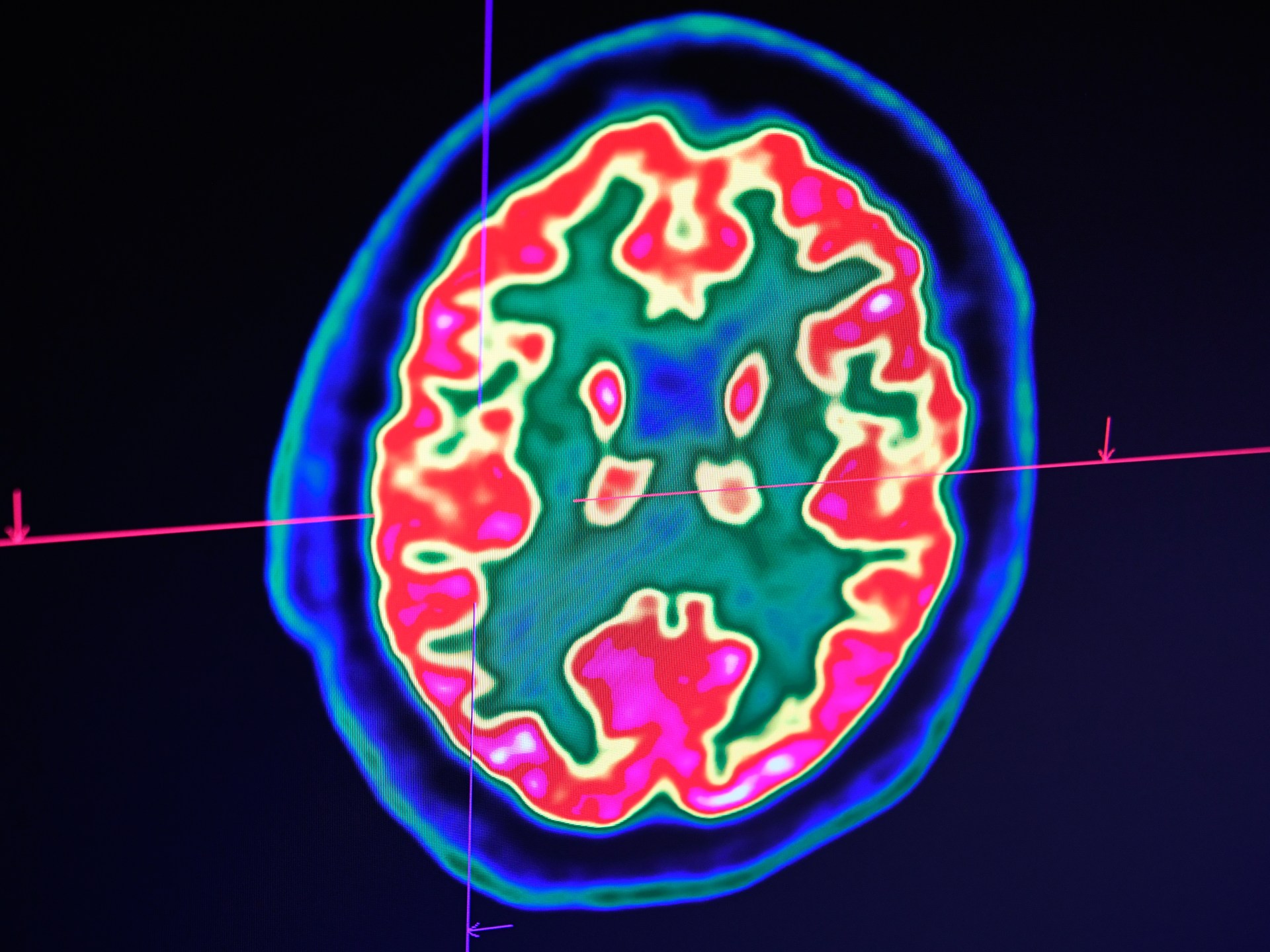

Takagi and his team used Stable Diffusion (SD), a deep learning AI model developed in Germany in 2022, to analyse the brain scans of test subjects shown up to 10,000 images while inside an MRI machine.

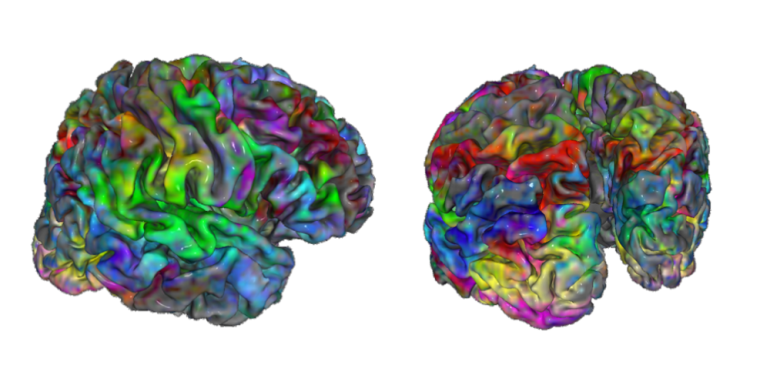

After Takagi and his research partner Shinji Nishimoto built a simple model to “translate” brain activity into a readable format, Stable Diffusion was able to generate high-fidelity images that bore an uncanny resemblance to the originals.

The AI could do this despite not being shown the pictures in advance or trained in any way to manufacture the results.

“We really didn’t expect this kind of result,” Takagi said.

Takagi stressed that the breakthrough does not, at this point, represent mind-reading – the AI can only produce images a person has viewed.

“This is not mind-reading,” Takagi said. “Unfortunately there are many misunderstandings with our research.”

“We can’t decode imaginations or dreams; we think this is too optimistic. But, of course, there is potential in the future.”

But the development has nonetheless raised concerns about how such technology could be used in the future amid a broader debate about the risks posed by AI generally.

In an open letter last month, tech leaders including Tesla founder Elon Musk and Apple co-founder Steve Wozniak called for a pause on the development of AI due to “profound risks to society and humanity.”

Despite his excitement, Takagi acknowledges that fears about mind-reading technology are not without merit, given the possibility of misuse by those with malicious intent or without consent.

“For us, privacy issues are the most important thing. If a government or institution can read people’s minds, it’s a very sensitive issue,” Takagi said. “There needs to be high-level discussions to make sure this can’t happen.”

Takagi and Nishimoto’s research generated much buzz in the tech community, which has been electrified by breakneck advancements in AI, including the release of ChatGPT, which produces human-like speech in response to a user’s prompts.

Their paper detailing the findings ranks in the top 1 percent for engagement among the more than 23 million research outputs tracked to date, according to Altmetric, a data company.

The study has also been accepted to the Conference on Computer Vision and Pattern Recognition (CVPR), set for June 2023, a common route for legitimising significant breakthroughs in neuroscience.

Even so, Takagi and Nishimoto are cautious about getting carried away by their findings.

Takagi maintains that there are two primary bottlenecks to genuine mind reading: brain-scanning technology and AI itself.

Despite advancements in neural interfaces – including electroencephalography (EEG) brain computers, which detect brain waves via electrodes connected to a subject’s head, and fMRI, which measures brain activity by detecting changes associated with blood flow – scientists believe we could be decades away from being able to accurately and reliably decode imagined visual experiences.

In Takagi and Nishimoto’s research, subjects had to sit in an fMRI scanner for up to 40 hours, which was costly as well as time-consuming.

In a 2021 paper, researchers at the Korea Advanced Institute of Science and Technology noted that conventional neural interfaces “lack chronic recording stability” due to the soft and complex nature of neural tissue, which reacts in unusual ways when brought into contact with synthetic interfaces.

Furthermore, the researchers wrote, “Current recording techniques generally rely on electrical pathways to transfer the signal, which is susceptible to electrical noises from surroundings. Because the electrical noises significantly disturb the sensitivity, achieving fine signals from the target region with high sensitivity is not yet an easy feat.”

Current AI limitations present a second bottleneck, although Takagi acknowledges these capabilities are advancing by the day.

“I’m optimistic for AI but I’m not optimistic for brain technology,” Takagi said. “I think this is the consensus among neuroscientists.”

Takagi and Nishimoto’s framework could be used with brain-scanning devices other than MRI, such as EEG or hyper-invasive technologies like the brain-computer implants being developed by Elon Musk’s Neuralink.

Even so, Takagi believes there is currently little practical application for his AI experiments.

For a start, the method cannot yet be transferred to novel subjects. Because the shape of the brain differs between individuals, you cannot directly apply a model created for one person to another.

But Takagi sees a future where it could be used for clinical, communication or even entertainment purposes.

“It’s hard to predict what a successful clinical application might be at this stage, as it is still very exploratory research,” Ricardo Silva, a professor of computational neuroscience at University College London and research fellow at the Alan Turing Institute, told Al Jazeera.

“This may turn out to be one extra way of developing a marker for Alzheimer’s detection and progression evaluation by assessing in which ways one could spot persistent anomalies in images of visual navigation tasks reconstructed from a patient’s brain activity.”

Silva shares concerns about the ethics of technology that could one day be used for genuine mind reading.

“The most pressing issue is to which extent the data collector should be forced to disclose in full detail the uses of the data collected,” he said.

“It’s one thing to sign up as a way of taking a snapshot of your younger self for, maybe, future clinical use… It’s another completely different thing to have it used in secondary tasks such as marketing, or worse, used in legal cases against someone’s own interests.”

Still, Takagi and his partner have no intention of slowing down their research. They are already planning version two of their project, which will focus on improving the technology and applying it to other modalities.

“We are now developing a much better [image] reconstructing technique,” Takagi said. “And it’s happening at a very rapid pace.”